A typical Code Jam problem requires at least 2 solution submissions: a small set and a large set. Expect the large set to have more test cases, larger inputs, and an 8 minute time limit. Normally, this means that the brute force algorithm you wrote for the small set is useless on the large set.

Normally.

A previous post looked at the Bullseye problem in Rust. I’m going to revisit it with Go language. My base machine will be a fairly respectable “CC2 Cluster Compute” EC2 instance:

- (cc2.8xlarge)

- 88 ECUs

- 16 Cores

- 60.5 GiB

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 | |

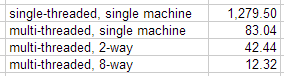

After porting the O(N) simplistic summing solution over from Rust and utilizing only one core, 1000 large test cases ran in 1280s. In the large set, there are a total of 6000 cases, 4000 of which involve large numbers, so roughly speaking, this solution would take 1 hour and 25 minutes. One of Go’s main features is its very simple parallelization via channels and goroutines. Goroutines let us fan-out our solver to multiple threads. Channels help us fan-in their responses to a single array in a threadsafe manner.

Here, we let the program use all logical processors. NumCPU detected 32 in this case (perhaps the 16 cores are hyperthreaded):

1

| |

Spawn one solver function per input. Note that the solver above does not return a value but writes it to the provided channel:

1 2 3 4 5 | |

Returned values are collated into an answer slice and placed in order:

1 2 3 4 5 | |

Executing this brought us to 83 seconds: a 15x improvment. Not bad. There’s a limit to how much scaling can be done on a single machine, so our next step is to use a cluster. If we divide the test cases out to 2 machines, theoretically, we should cut that time in half.

Data is grouped into 2 batches in this case. I’ve tried to distribute the load evenly among the nodes to ensure that no one gets the lucky easy half:

1 2 3 4 | |

Kick off a sender for each batch and don’t wait for a response:

1 2 3 | |

Batches are serialized and sent to the node. When the results come back, send them back over the Result channel one at a time:

1 2 3 4 5 6 7 8 9 10 11 12 13 | |

The Node Mode code. Listen for connection, deserialize the batch and spawn solvers. In Single mode we extracted the answer into a []uint64 but we need to return this batch to the Master node so we’ll keep it in a []*Result and let it sort things out:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 | |

Executing this on 2 machines yielded 42s, very close to our theory. Since I’d gone this far, why not see what 8 machines would do? Here are the results from all 4 executions:

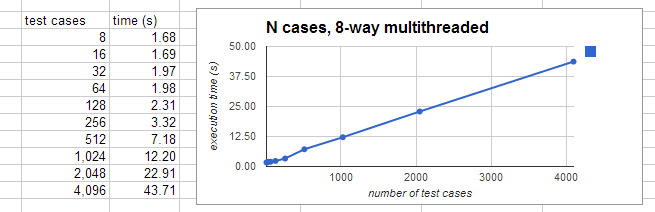

Enabling multithreading and 8-way parallelization brought us from 1280s to 12s, a 100x improvement; very good. Now that we’ve got our cluster up and running, here are execution times for various size loads:

And finally, all 6000 cases of the large set executed in 43.38s. This is lower than the 4096 cases tested because for those tests I was repeating a single case which was perhaps more complex on average than the cases in the large set.

Possible enhancements:

- Allow some tolerance for TCP delays using timeouts and retries.

- Improve load balancing by dividing batches into smaller chunks. When a node becomes available, send it a new batch.

- Provide failover. If one node refuses to connect, or if it doesn’t return its results within the expected time, strike it and give its work to another node.

- Create logging for debugging remote node.

- General error handling; currently, nothing is checked.

I didn’t find language in the Terms and Conditions that prohibited the use of clusters (of machines; clusters of humans are a no-no). However, Code Jam is clearly focused on elegant expression of a problem using code and on algorithmic efficiency. I’d guess that code executing on a single machine - multi-threaded or otherwise - falls within the spirit of the contest; the computing power of a single machine is fairly predictable. Once clusters are in play, there’s too much variability among contestants (e.g., access to server farm, finances to run EC2 cluster, using a stone-age language limited to single threading * cough * javascript). The playing field against an underprivileged coder becomes a little imbalanced. At any rate, the finals provide only a single machine with no internet, IIRC.

This was merely a POC to mess around with Go’s concurrency mechanisms, but for those of you who need just that extra edge, the code is up on github: clusterjam.go.